Notes taken by Luizo (@LuigiBrosNin on Telegram)

- Exam info

- the project is supervised by Gabrielli and Zingaro, easy exam (at least according to Gabrielli), groups of 1 to 4 people, ideally 3, but they’re flexible

- the discussion is mainly about the project, plus the important theory, but not in much depth

- Zingaro said there’s no discussion and no theory asked, nice

Theory

Intro

Artificial Intelligence Definitions (informal)

- Turing’s imitation game test

- “Knowing what to do when you don’t know what to do”

- Models that are designed to generate new content, such as images, text, audio, or even video (Generative AI)

- Thinking/Acting humanly, Thinking/Acting rationally

Artificial General Intelligence (AGI) → AGI is envisioned as having the capability to perform any intellectual task that a human being can do

To solve problems with AI there is no predefined algorithm; instead we train a model (a neural network), adjusting its results through labeled data (basically data with solutions). In the training phase each neuron computes a simple function of its inputs, where each input is multiplied by a real number, the weight of that input on the final decision (output). Comparing the output with the expected label and adjusting the weights accordingly lowers the error. There are different measures of how well the model performs. One of them is accuracy, but accuracy is not always a good metric, so the measure has to be chosen case by case.
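To make the training idea concrete, here is a minimal sketch of a single neuron trained on labeled data. Everything in it is an assumption for illustration, not something from the lectures: the logical AND dataset, the perceptron update rule, the threshold activation, and the names `predict`, `train` and `accuracy`.

```python
# Labeled data for logical AND: (inputs, expected output).
DATA = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]


def predict(weights, bias, inputs):
    """A single neuron: weighted sum of the inputs, then a threshold."""
    total = sum(w * x for w, x in zip(weights, inputs)) + bias
    return 1 if total > 0 else 0


def train(data, epochs=20, learning_rate=1):
    """Perceptron rule (assumed here): move each weight so the error shrinks."""
    weights, bias = [0, 0], 0
    for _ in range(epochs):
        for inputs, label in data:
            # Compare the neuron's output with the label.
            error = label - predict(weights, bias, inputs)
            weights = [w + learning_rate * error * x
                       for w, x in zip(weights, inputs)]
            bias += learning_rate * error
    return weights, bias


def accuracy(weights, bias, data):
    """Fraction of examples classified correctly."""
    correct = sum(predict(weights, bias, x) == y for x, y in data)
    return correct / len(data)


weights, bias = train(DATA)
print(accuracy(weights, bias, DATA))  # 1.0 on this tiny dataset
```

On why accuracy can mislead: with heavily imbalanced labels, a model that always predicts the majority class still scores a high accuracy while being useless on the rare class.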

3 types of AI

- Symbolic AI → Rule based systems

- ..

- ..

Neural Networks

I’m following the lessons without taking notes from now on, as there are some notes on Carta Binaria I can study from. I might still do summaries and exam resources. As usual I recommend Corigliano’s notes (in Italian).

Summaries

Intelligent agents

Agent function (History → Action)

Rationality → choosing whichever action maximizes the expected value of the performance measure. Being rational implies exploration, learning and autonomy; it does not mean always being successful.

PEAS → Task environment

- Performance measure

- Environment

  - Observable

  - Deterministic

  - Episodic

  - Static

  - Discrete

  - Single-Agent

- Actuators → possible low level actions

- Sensors

Agent types

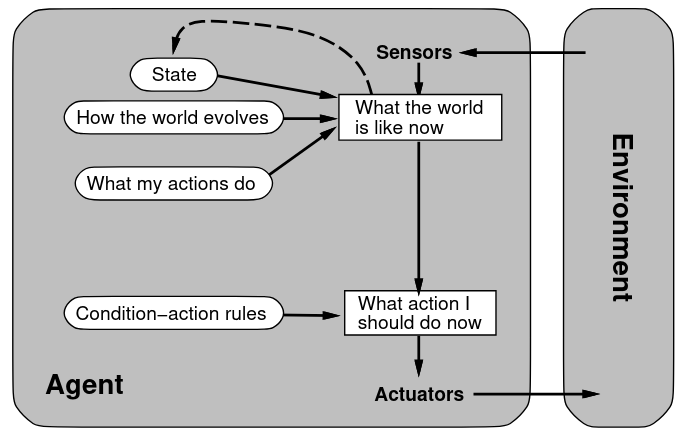

- Simple reflex agents (Condition → Action)

- Reflex agents with states (State → condition → action)

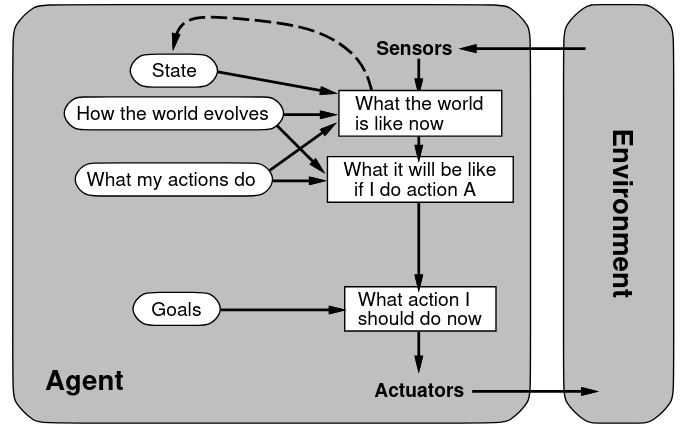

- Goal-based agents (State → prediction → action)

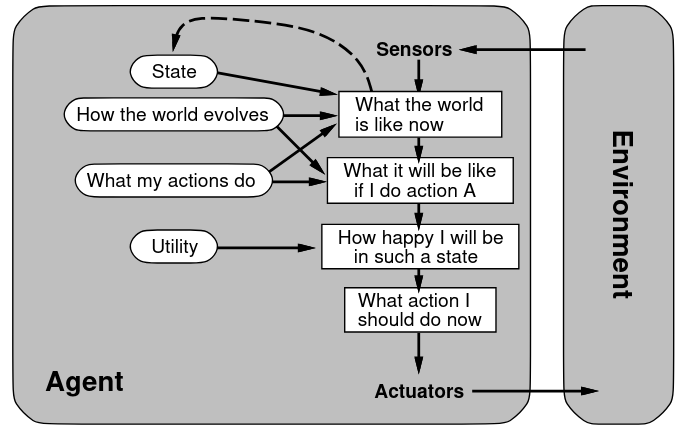

- Utility-based agents (State → prediction → maximize utility → action)

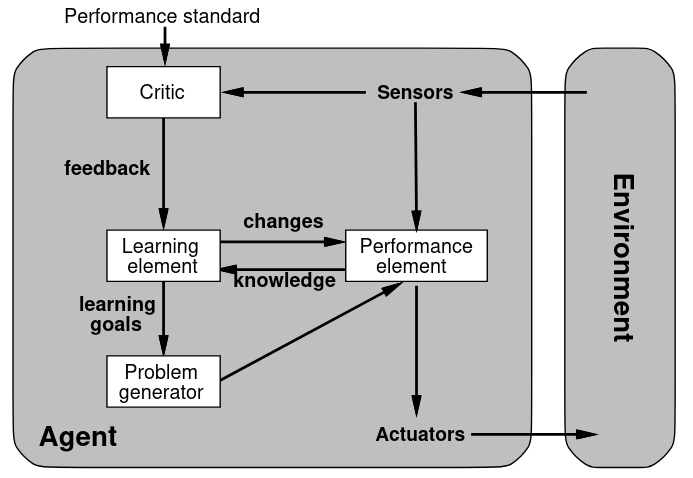

- Learning agents (feedback → changes → knowledge → learning goals)
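A rough sketch of two of the agent types above, under stated assumptions. The two-square vacuum world (locations `A` and `B`), the action names, and the toy probabilities and utilities are all hypothetical, picked only to show "condition → action" next to "maximize expected utility".

```python
def simple_reflex_vacuum_agent(percept):
    """Condition -> action: decide from the current percept only."""
    location, status = percept
    if status == "dirty":
        return "suck"
    return "right" if location == "A" else "left"


def utility_based_action(actions, outcomes, utility):
    """Pick the action with the highest expected utility.

    outcomes(action) returns a list of (probability, resulting_state) pairs.
    """
    def expected_utility(action):
        return sum(p * utility(state) for p, state in outcomes(action))

    return max(actions, key=expected_utility)


# Hypothetical toy numbers: a safe move versus a risky shortcut.
def outcomes(action):
    if action == "safe":
        return [(1.0, "arrive_late")]
    return [(0.5, "arrive_early"), (0.5, "crash")]


UTILITY = {"arrive_late": 5, "arrive_early": 10, "crash": -100}

print(simple_reflex_vacuum_agent(("A", "dirty")))                    # suck
print(utility_based_action(["safe", "risky"], outcomes, UTILITY.get))  # safe
```

The risky action has expected utility 0.5 · 10 + 0.5 · (−100) = −45, so the rational choice is the safe one even though the risky one sometimes turns out better.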

Problem solving and search

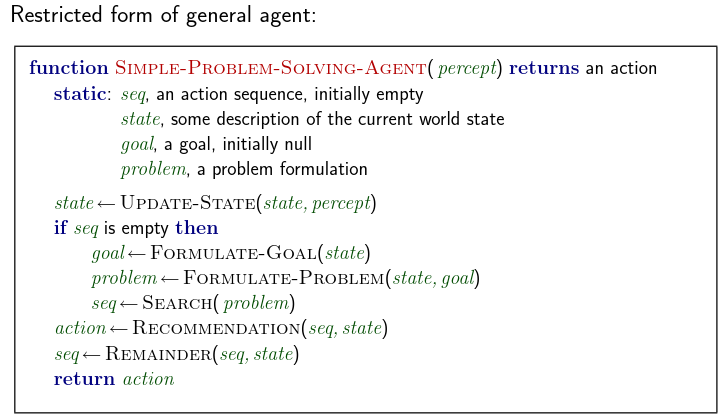

- General formalization of an agent

Problem types

- Deterministic, fully observable ⇒ single-state problem

- Non-observable ⇒ conformant problem

- Nondeterministic and/or partially observable ⇒ contingency problem

- Unknown state space ⇒ exploration problem (“online”)

Problem formulation

- Initial state

- Successor function

- Goal test

- Path cost

State space

- Abstract state → set of real states

- Abstract action → complex combination of real actions

- Abstract solution → set of real paths that are solutions in the real world
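The four parts of a problem formulation (initial state, successor function, goal test, path cost) can be sketched as a small class. The `RouteProblem` name, the road map and the distances are made up for illustration.

```python
from dataclasses import dataclass


@dataclass
class RouteProblem:
    """Single-state problem on a hypothetical weighted road map."""
    roads: dict          # city -> {neighbour: distance}
    initial_state: str
    goal: str

    def successors(self, state):
        """Successor function: (action, next_state) pairs reachable from state."""
        return [(f"go to {city}", city) for city in self.roads.get(state, {})]

    def is_goal(self, state):
        """Goal test."""
        return state == self.goal

    def path_cost(self, path):
        """Path cost: sum of the step costs along a list of states."""
        return sum(self.roads[a][b] for a, b in zip(path, path[1:]))


ROADS = {"A": {"B": 1, "C": 4}, "B": {"C": 2}, "C": {}}
problem = RouteProblem(ROADS, initial_state="A", goal="C")

print(problem.successors("A"))            # [('go to B', 'B'), ('go to C', 'C')]
print(problem.path_cost(["A", "B", "C"]))  # 3
```

Each city name here is an abstract state: it stands for the whole set of real situations in which you are somewhere in that city.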

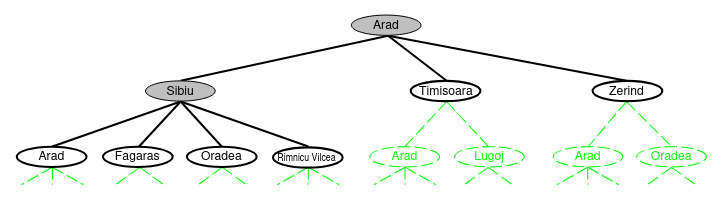

Tree-search algorithms → offline, simulated exploration of state space by generating successors of already-explored states

States vs. Nodes

- State → representation of a physical configuration

- Node → data structure constituting part of a search tree
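A minimal sketch of the state/node distinction and of a generic tree search. The `GRAPH` state space and the `Node` fields (`parent`, `depth`) are assumptions for the example; a FIFO frontier is used here, which makes this particular version breadth-first.

```python
from collections import deque
from dataclasses import dataclass
from typing import Optional

# Hypothetical state space: state -> list of successor states.
GRAPH = {"A": ["B", "C"], "B": ["D"], "C": ["D"], "D": []}


@dataclass
class Node:
    """Search-tree bookkeeping wrapped around a state."""
    state: str
    parent: Optional["Node"] = None
    depth: int = 0

    def path(self):
        """States from the root down to this node."""
        node, states = self, []
        while node is not None:
            states.append(node.state)
            node = node.parent
        return states[::-1]


def tree_search(start, goal):
    """Generate successors of already-explored states until the goal is found."""
    frontier = deque([Node(start)])
    while frontier:
        node = frontier.popleft()
        if node.state == goal:
            return node
        for successor in GRAPH[node.state]:
            frontier.append(Node(successor, node, node.depth + 1))
    return None


solution = tree_search("A", "D")
print(solution.path(), solution.depth)  # ['A', 'B', 'D'] 2
```

Note that state `D` ends up in two different nodes (one reached through `B`, one through `C`): one state, several nodes.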

Uninformed search strategies → use only the information available in the problem definition

- Breadth-first search

- Uniform-cost search

- Depth-first search

- Depth-limited search

- Iterative deepening search
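The strategies listed above differ mostly in which path they expand next. A compact sketch, assuming a small hypothetical weighted graph without cycles (so plain depth-first search terminates); plain depth-first search is `depth_limited` with a limit larger than the graph depth.

```python
import heapq
from collections import deque

# Hypothetical weighted graph: state -> {successor: step cost}.
GRAPH = {
    "S": {"A": 1, "B": 5},
    "A": {"B": 1, "G": 10},
    "B": {"G": 2},
    "G": {},
}


def breadth_first(start, goal):
    """Expand the shallowest path first (FIFO frontier)."""
    frontier = deque([[start]])
    while frontier:
        path = frontier.popleft()
        if path[-1] == goal:
            return path
        for nxt in GRAPH[path[-1]]:
            frontier.append(path + [nxt])
    return None


def uniform_cost(start, goal):
    """Expand the cheapest path first (priority queue ordered by path cost)."""
    frontier = [(0, [start])]
    while frontier:
        cost, path = heapq.heappop(frontier)
        if path[-1] == goal:
            return cost, path
        for nxt, step in GRAPH[path[-1]].items():
            heapq.heappush(frontier, (cost + step, path + [nxt]))
    return None


def depth_limited(path, goal, limit):
    """Depth-first search that refuses to go deeper than `limit` steps."""
    if path[-1] == goal:
        return path
    if limit == 0:
        return None
    for nxt in GRAPH[path[-1]]:
        found = depth_limited(path + [nxt], goal, limit - 1)
        if found:
            return found
    return None


def iterative_deepening(start, goal, max_depth=10):
    """Run depth-limited search with limits 0, 1, 2, ... until a solution appears."""
    for limit in range(max_depth + 1):
        found = depth_limited([start], goal, limit)
        if found:
            return found
    return None


print(breadth_first("S", "G"))        # ['S', 'A', 'G']
print(uniform_cost("S", "G"))         # (4, ['S', 'A', 'B', 'G'])
print(depth_limited(["S"], "G", 10))  # ['S', 'A', 'B', 'G']
print(iterative_deepening("S", "G"))  # ['S', 'A', 'G']
```

On this graph breadth-first and iterative deepening return the shallowest solution (cost 11), while uniform-cost returns the cheapest one (cost 4).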

Completeness → finds solution if one exists

Time complexity → number of nodes generated/expanded

Space complexity → max number of nodes in memory

Optimality → always least-cost solution?

$b$ → max branching factor

$d$ → depth of the least-cost solution

$m$ → max depth of state space (can be $\infty$)

⚠ Failure to detect repeated states can turn a linear problem into an exponential one
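A small experiment showing the warning in numbers. The state space is an assumption chosen to make the effect obvious: a chain of states `0..depth` where every state has two different actions that both lead to the next state.

```python
def count_expansions(depth, detect_repeats):
    """Count nodes expanded while exploring a chain of states 0..depth.

    Hypothetical state space: every state i < depth has two different
    actions, and both lead to state i + 1.
    """
    frontier = [0]
    seen = set()
    expanded = 0
    while frontier:
        state = frontier.pop()
        if detect_repeats:
            if state in seen:
                continue  # already explored, skip the duplicate
            seen.add(state)
        expanded += 1
        if state < depth:
            frontier.extend([state + 1, state + 1])  # two actions, same successor
    return expanded


print(count_expansions(10, detect_repeats=True))   # 11
print(count_expansions(10, detect_repeats=False))  # 2047
```

With repeated-state detection the work grows linearly (`depth + 1` expansions); without it the search tree doubles at every level (`2**(depth + 1) - 1` expansions).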

MiniZinc

- Declarative language → you describe the problem, the solver finds the solution
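To show the declarative idea without MiniZinc syntax, here is a toy sketch in Python. It is not MiniZinc and not how MiniZinc works internally (MiniZinc hands the model to real constraint solvers). The brute-force `solve` function, the variables `x` and `y` and the two constraints are all invented for the example; the point is only that the problem description contains no solving logic.

```python
from itertools import product


def solve(domains, constraints):
    """Naive generic solver: try every assignment, return the first valid one."""
    names = list(domains)
    for values in product(*(domains[name] for name in names)):
        assignment = dict(zip(names, values))
        if all(constraint(assignment) for constraint in constraints):
            return assignment
    return None


# Problem description only: variables, domains, constraints.
domains = {"x": range(1, 10), "y": range(1, 10)}
constraints = [
    lambda a: a["x"] + a["y"] == 10,
    lambda a: a["x"] * a["y"] == 21,
]

print(solve(domains, constraints))  # {'x': 3, 'y': 7}
```

Changing the problem means editing only `domains` and `constraints`; `solve` stays untouched.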

Exam Projects

- prompt engineering ideas

  - break the problem down into simpler steps to make the LLM “think”

  - expand some operations (e.g. 8 → 3+5 = 8) if relevant to the problem

  - point the LLM at its errors in the prompt (with screenshots or similar) so it’s able to find and fix them
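A tiny sketch of how these ideas could be turned into a prompt template. The `build_prompt` helper and its wording are hypothetical, and it only builds a string; no LLM API is called.

```python
def build_prompt(task, steps, observed_error=None):
    """Assemble a prompt that spells out the steps and, optionally, a known error."""
    lines = [f"Task: {task}", "Work through these steps one at a time:"]
    lines += [f"{i}. {step}" for i, step in enumerate(steps, start=1)]
    if observed_error:
        lines.append(
            f"Your previous answer had this error, find and fix it: {observed_error}"
        )
    return "\n".join(lines)


prompt = build_prompt(
    task="Compute 17 + 8",
    steps=[
        "Rewrite 8 as 3 + 5",
        "Add 3 to 17 to reach 20",
        "Add the remaining 5",
    ],
    observed_error="the result was reported as 24",
)
print(prompt)
```

The steps list covers the first two ideas (decomposition and expanding operations), and `observed_error` covers the third.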