3. Edge Detection

Edges are seen as sharp changes in brightness

Brightness changes, and therefore edges, are detected by applying a threshold to the 1st derivative (its magnitude)

Gradient approximation

In 2D, we compute the gradient (the vector of partial derivatives), which tells us both how strong the edge is and which way it points:

- Magnitude = strength of the edge

- Direction = towards the brighter side (perpendicular to the edge)

We approximate the gradient by estimating the derivatives with:

- Differences (pixel differences)

- Kernels (same principle, implemented as correlation kernels)
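Below is a minimal sketch of the pixel-difference approach in plain Python. It assumes a grayscale image stored as a list of rows, with the row index growing downwards. `gradient_at` is a hypothetical helper. Forward differences are only one possible choice; central differences are also common.

```python
import math

def gradient_at(img, r, c):
    """Approximate the gradient at pixel (r, c) with forward pixel differences.

    img is a grayscale image stored as a list of rows; r + 1 and c + 1 must be valid.
    Returns (magnitude, direction in radians).
    """
    gx = img[r][c + 1] - img[r][c]  # horizontal difference ~ dI/dx
    gy = img[r + 1][c] - img[r][c]  # vertical difference ~ dI/dy (rows grow downwards)
    magnitude = math.hypot(gx, gy)  # strength of the edge
    direction = math.atan2(gy, gx)  # points towards the brighter side
    return magnitude, direction

# Vertical step edge: dark on the left, bright on the right
img = [
    [10, 10, 200, 200],
    [10, 10, 200, 200],
    [10, 10, 200, 200],
]
mag, ang = gradient_at(img, 0, 1)
print(mag, math.degrees(ang))  # 190.0 0.0
```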

Noise workarounds

Noise causes false positives, so we smooth the image as part of edge detection

The Prewitt and Sobel operators combine smoothing and differentiation by also taking the surrounding pixels into account when evaluating the edge:

- Prewitt operator → uses the 8 surrounding pixels (3x3 neighborhood)

- Sobel operator → likewise, but the pixels in the central row/column are weighted 2x
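Both operators can be sketched as 3x3 correlation kernels. This is an illustrative plain-Python version; the names `PREWITT_X`, `SOBEL_X` and `correlate_at` are hypothetical. Here the kernels are applied to the vertical step edge at the center of a small test image:

```python
# x-derivative kernels, used with correlation (no kernel flipping)
PREWITT_X = [[-1, 0, 1],
             [-1, 0, 1],
             [-1, 0, 1]]
SOBEL_X = [[-1, 0, 1],
           [-2, 0, 2],   # central row weighted 2x
           [-1, 0, 1]]
# y-derivative kernels are the transposes
PREWITT_Y = [list(col) for col in zip(*PREWITT_X)]
SOBEL_Y = [list(col) for col in zip(*SOBEL_X)]

def correlate_at(img, kernel, r, c):
    """Correlate a 3x3 kernel with the 3x3 neighborhood of interior pixel (r, c)."""
    return sum(kernel[i][j] * img[r + i - 1][c + j - 1]
               for i in range(3) for j in range(3))

img = [
    [10, 10, 200, 200],
    [10, 10, 200, 200],
    [10, 10, 200, 200],
]
print(correlate_at(img, PREWITT_X, 1, 1))  # 570 = 3 * 190
print(correlate_at(img, SOBEL_X, 1, 1))    # 760 = 4 * 190
print(correlate_at(img, PREWITT_Y, 1, 1))  # 0: no vertical change
```

Dividing the responses by 3 (Prewitt) or 4 (Sobel) turns them back into an average pixel difference (190 here).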

Non-Maxima Suppression (NMS)

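Strategy for finding the local maxima of the gradient magnitude along the gradient direction, so that each edge is thinned to a single pixel:

- We compare the gradient magnitude at each pixel with the magnitudes of its two neighbors along the gradient direction

- Those neighbors usually fall between grid points, so we estimate their magnitude by linear interpolation (lerp) from the closest points of the discrete grid

To get rid of noise, we then apply a threshold to the surviving maxima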
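Here is a sketch of the NMS test at a single pixel. It assumes the gradient components `gx`, `gy` and a magnitude grid `mag` are already available; all names are hypothetical. The neighbors one unit step ahead of and behind the pixel along the gradient are estimated by bilinear interpolation (lerp along x, then along y):

```python
import math

def lerp_at(grid, y, x):
    """Bilinear interpolation (lerp along x, then along y) of grid at real position (y, x)."""
    y0 = min(math.floor(y), len(grid) - 2)     # top-left corner of the surrounding 2x2 cell
    x0 = min(math.floor(x), len(grid[0]) - 2)
    dy, dx = y - y0, x - x0
    top = (1 - dx) * grid[y0][x0] + dx * grid[y0][x0 + 1]
    bottom = (1 - dx) * grid[y0 + 1][x0] + dx * grid[y0 + 1][x0 + 1]
    return (1 - dy) * top + dy * bottom

def is_local_max(mag, gx, gy, r, c):
    """NMS test at interior pixel (r, c) with gradient (gx, gy): keep the pixel only if
    its magnitude is >= the interpolated magnitudes one step ahead and one step behind
    along the gradient direction."""
    g = math.hypot(gx, gy)
    if g == 0:
        return False
    ux, uy = gx / g, gy / g                      # unit step along the gradient
    ahead = lerp_at(mag, r + uy, c + ux)
    behind = lerp_at(mag, r - uy, c - ux)
    return mag[r][c] >= ahead and mag[r][c] >= behind

mag = [[1, 5, 2],
       [2, 8, 3],
       [1, 6, 2]]
print(is_local_max(mag, 1.0, 0.0, 1, 1))  # True: 8 >= 3 and 8 >= 2
mag[0][1] = 9
print(is_local_max(mag, 0.0, 1.0, 1, 1))  # False: the neighbor above has 9
```

The noise threshold is applied afterwards, only to the pixels that pass this test.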

Canny’s Edge Detector

Standard criteria

- Good detection → extract edges even in noisy images

- Good localization → minimize the distance between the detected edge and the true edge

- One response to one edge → one single edge pixel detected at each true edge

Canny’s Pipeline

- Gaussian smoothing

- Gradient computation

- Non-maxima suppression

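- Hysteresis thresholding → relies on a high and a low threshold: pixels above the high threshold are edges, while pixels between the two thresholds are kept only if they are connected to a strong edge pixel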
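Here is a possible plain-Python sketch of hysteresis thresholding. It assumes `mag` holds the NMS-thinned gradient magnitudes and that "connected" means 8-connected (a common choice, assumed here). Strong pixels seed a breadth-first search that keeps only the weak pixels reachable from them:

```python
from collections import deque

def hysteresis(mag, low, high):
    """Boolean edge map: pixels >= high are edges; pixels >= low become edges
    only if they are 8-connected (possibly through other weak pixels) to a strong one."""
    rows, cols = len(mag), len(mag[0])
    edges = [[False] * cols for _ in range(rows)]
    queue = deque()
    for r in range(rows):
        for c in range(cols):
            if mag[r][c] >= high:          # strong pixel: seed
                edges[r][c] = True
                queue.append((r, c))
    while queue:                           # grow through connected weak pixels (BFS)
        r, c = queue.popleft()
        for dr in (-1, 0, 1):
            for dc in (-1, 0, 1):
                nr, nc = r + dr, c + dc
                if (0 <= nr < rows and 0 <= nc < cols
                        and not edges[nr][nc] and mag[nr][nc] >= low):
                    edges[nr][nc] = True
                    queue.append((nr, nc))
    return edges

mag = [[0, 50, 120, 60],
       [0, 0, 0, 0],
       [60, 0, 0, 0]]
edges = hysteresis(mag, low=40, high=100)
print(edges[0])  # [False, True, True, True]: weak pixels next to the strong one survive
print(edges[2])  # [False, False, False, False]: the isolated weak pixel is dropped
```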

2nd derivative edge detection methods

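Zero crossing = edges are located where the 2nd derivative crosses zero. The Laplacian operator (sum of the second-order derivatives, $\nabla^2 I = \frac{\partial^2 I}{\partial x^2} + \frac{\partial^2 I}{\partial y^2}$) is used to approximate the derivatives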
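To make the zero-crossing idea concrete, here is a sketch using the standard 3x3 discrete Laplacian kernel and its 1D counterpart, the second difference. The profile values are made up for illustration. A real detector would also have to handle exact zeros, which this strict sign test skips:

```python
# 3x3 discrete Laplacian: second difference along x plus second difference along y
LAPLACIAN = [[0, 1, 0],
             [1, -4, 1],
             [0, 1, 0]]

def laplacian_at(img, r, c):
    """Correlate the Laplacian kernel with the neighborhood of interior pixel (r, c)."""
    return sum(LAPLACIAN[i][j] * img[r + i - 1][c + j - 1]
               for i in range(3) for j in range(3))

def second_difference(signal):
    """1D second derivative f[i-1] - 2 f[i] + f[i+1]; output index i is sample i + 1."""
    return [signal[i - 1] - 2 * signal[i] + signal[i + 1]
            for i in range(1, len(signal) - 1)]

def zero_crossings(values):
    """Indices i where the sign strictly changes between values[i] and values[i + 1]."""
    return [i for i in range(len(values) - 1) if values[i] * values[i + 1] < 0]

profile = [10, 10, 20, 60, 80, 90, 90]   # 1D intensity profile across an edge
d2 = second_difference(profile)
print(d2)                  # [10, 30, -20, -10, -10]
print(zero_crossings(d2))  # [1]: between samples 2 and 3, where the step is steepest
```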

Laplacian of Gaussian (LoG)

Pipeline

- Gaussian smoothing

- Apply Laplacian

- Find zero-crossings

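- Pick as the actual edge pixel the one, on either side of the zero-crossing, where the absolute value of the LoG is smaller

The parameter $\sigma$ controls the degree of smoothing and therefore the scale of the features to detect (we blur more if the edges are “bigger”)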
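The LoG pipeline can be sketched in 1D, assuming a sampled Gaussian truncated at $3\sigma$ and replicated borders (both are assumptions, not part of the notes). `log_edges_1d` smooths the signal, takes the second difference, finds the zero-crossings and keeps the sample with the smaller absolute LoG:

```python
import math

def gaussian_smooth(signal, sigma):
    """Smooth a 1D signal with a sampled Gaussian truncated at 3 sigma (borders replicated)."""
    radius = max(1, math.ceil(3 * sigma))
    offsets = range(-radius, radius + 1)
    weights = [math.exp(-(x * x) / (2 * sigma * sigma)) for x in offsets]
    total = sum(weights)
    n = len(signal)
    return [sum(w * signal[min(max(i + x, 0), n - 1)] for x, w in zip(offsets, weights)) / total
            for i in range(n)]

def log_edges_1d(signal, sigma):
    """1D LoG edge detection: Gaussian smoothing, second difference, zero-crossings,
    then keep the sample on the side of each crossing where |LoG| is smaller."""
    s = gaussian_smooth(signal, sigma)
    log = [s[i - 1] - 2 * s[i] + s[i + 1] for i in range(1, len(s) - 1)]  # log[j] ~ sample j + 1
    edges = []
    for j in range(len(log) - 1):
        if log[j] * log[j + 1] < 0:                  # zero-crossing between j and j + 1
            best = j if abs(log[j]) < abs(log[j + 1]) else j + 1
            edges.append(best + 1)                   # back to signal indices
    return edges

step = [0.0] * 10 + [100.0] * 10   # ideal step between samples 9 and 10
print(log_edges_1d(step, sigma=1.0))
print(log_edges_1d(step, sigma=3.0))
```

On an ideal step, the two samples around the crossing have (nearly) equal |LoG|, so either may be returned. On real images, a larger $\sigma$ suppresses more noise and small details, at the cost of merging nearby edges.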

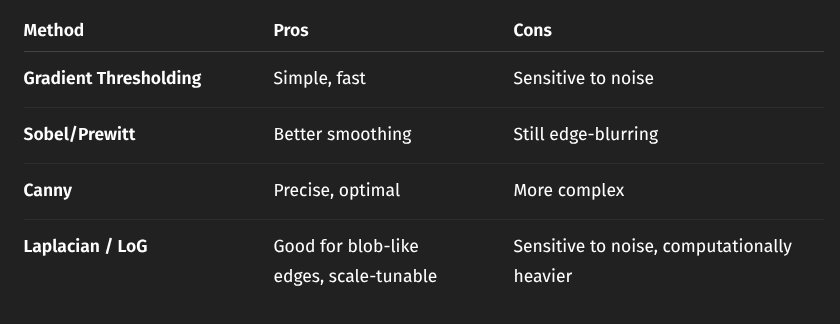

Comparison

4. Local Features

Find corresponding points (local invariant features) between two or more images of the same scene

Three-Stage pipeline

- Detection of salient points (keypoints)

  - Repeatability → find the same keypoints in different views

  - Saliency → find keypoints surrounded by informative patterns

  - Speed

- Description of these points (what makes each of them unique)

  - Distinctiveness/robustness trade-off → capture invariant information while disregarding noise and image-specific changes (e.g., light changes)

  - Compactness → low memory footprint and fast matching

  - Speed

- Matching of the descriptors between images

Corner detectors

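Corners are ideal keypoints because the intensity changes in all directions around them

Moravec Interest Point Detector → looks at patches in the image and computes their cornerness as the minimum squared difference between the patch and its 8 neighboring (shifted) patches; a high cornerness means high variation in every direction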
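Here is a direct plain-Python sketch of Moravec's cornerness on three small synthetic images: a corner, a vertical edge and a flat region. The 3x3 patch size (`half=1`) is an assumption:

```python
def moravec_cornerness(img, r, c, half=1):
    """Moravec cornerness at (r, c): the minimum SSD between the (2*half+1)^2 patch
    centered at (r, c) and the same patch shifted towards each of the 8 neighbors.
    (r, c) must be at least half + 1 pixels away from the border."""
    shifts = [(-1, -1), (-1, 0), (-1, 1), (0, -1), (0, 1), (1, -1), (1, 0), (1, 1)]
    scores = []
    for dr, dc in shifts:
        ssd = 0
        for i in range(-half, half + 1):
            for j in range(-half, half + 1):
                diff = img[r + i + dr][c + j + dc] - img[r + i][c + j]
                ssd += diff * diff
        scores.append(ssd)
    return min(scores)  # large only if the patch changes whichever way it is shifted

size = 7
corner = [[100 if (i >= 3 and j >= 3) else 0 for j in range(size)] for i in range(size)]
edge = [[100 if j >= 3 else 0 for j in range(size)] for i in range(size)]
flat = [[50] * size for _ in range(size)]
print(moravec_cornerness(corner, 3, 3))  # 20000
print(moravec_cornerness(edge, 3, 3))    # 0: shifting along the edge changes nothing
print(moravec_cornerness(flat, 3, 3))    # 0
```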

Harris Corner Detector

- Compute image gradients (how intensity changes)

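- Build the structure tensor matrix $M$ (sums of $I_x^2$, $I_y^2$ and $I_x I_y$ over a window around the pixel)

- Compute the corner response $R = \det(M) - k\,\mathrm{tr}(M)^2$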

- Threshold & NMS to pick the best corners
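The first three steps can be sketched for a single pixel as follows. The sketch assumes central differences for the gradients, a uniform 3x3 window instead of the usual Gaussian weighting, and $k = 0.04$ (a commonly used value). Thresholding and NMS over the whole response map are left out:

```python
def harris_response(img, r, c, k=0.04, half=1):
    """Harris corner response R = det(M) - k * trace(M)^2 at interior pixel (r, c).

    Gradients use central differences; the structure tensor M sums Ix^2, Iy^2 and
    Ix*Iy over a (2*half+1)^2 window with uniform weights.
    (r, c) must be at least half + 1 pixels away from the border.
    """
    sxx = syy = sxy = 0.0
    for i in range(r - half, r + half + 1):
        for j in range(c - half, c + half + 1):
            ix = (img[i][j + 1] - img[i][j - 1]) / 2.0   # dI/dx
            iy = (img[i + 1][j] - img[i - 1][j]) / 2.0   # dI/dy
            sxx += ix * ix
            syy += iy * iy
            sxy += ix * iy
    det = sxx * syy - sxy * sxy
    trace = sxx + syy
    return det - k * trace * trace

size = 7
corner = [[100 if (i >= 3 and j >= 3) else 0 for j in range(size)] for i in range(size)]
edge = [[100 if j >= 3 else 0 for j in range(size)] for i in range(size)]
print(harris_response(corner, 3, 3) > 0)  # True: corner (both eigenvalues of M large)
print(harris_response(edge, 3, 3) < 0)    # True: edge (one eigenvalue of M is 0)
```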

Harris invariance properties

- Rotation invariance

- Partial illumination invariance (invariant to an additive brightness shift, but not to contrast changes)

- No scale invariance

Scale-Space, LoG, DoG

Key finding → apply a fixed-size detection tool to increasingly down-sampled and smoothed versions of the input image, through the Laplacian of Gaussian (LoG) or its approximation, the Difference of Gaussians (DoG)

Scale-space → family of images obtained by smoothing the same image at increasing scales:

$L(x, y, \sigma) = G(x, y, \sigma) * I(x, y)$

Where:

- $G$ is the Gaussian kernel

- $\sigma$ controls the scale (blur amount)

- $*$ is convolution

Multi-Scale Feature Detection (Lindeberg) → lets us determine at which scale each feature should be extracted

- Use scale-normalized derivatives to detect features at their “natural” scale.

- Normalize the filter responses (multiply second-order derivatives, such as the LoG, by $\sigma^2$)

- Search for extrema (maxima or minima) in $x$, $y$ and $\sigma$, i.e., in 3D, with the scale-normalized LoG

LoG → second-order derivative operator that detects blobs (circular structures)

DoG → approximation of the LoG: we build a pyramid of images blurred with different $\sigma$, compute the difference between adjacent levels and find the extrema in 3D across $(x, y, \sigma)$

- We reject low-contrast responses

- We prune keypoints lying on edges using the Hessian matrix

This gives the optimal (characteristic) scale for each detail
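Here is a 1D sketch of DoG extrema detection. It assumes $\sigma_0 = 1.6$, $k = \sqrt{2}$ and a hypothetical contrast threshold `min_contrast`. A real SIFT-style detector works in 2D, splits the scale-space into down-sampled octaves and adds the Hessian-based edge pruning; all of these are omitted here:

```python
import math

def gaussian_blur(signal, sigma):
    """Sampled Gaussian blur truncated at 3 sigma, normalized, with replicated borders."""
    radius = max(1, math.ceil(3 * sigma))
    offsets = range(-radius, radius + 1)
    weights = [math.exp(-(x * x) / (2 * sigma * sigma)) for x in offsets]
    total = sum(weights)
    n = len(signal)
    return [sum(w * signal[min(max(i + x, 0), n - 1)] for x, w in zip(offsets, weights)) / total
            for i in range(n)]

def dog_keypoints(signal, sigma0=1.6, k=2 ** 0.5, levels=7, min_contrast=1.0):
    """Return (position, sigma, response) for the 3D extrema of a 1D DoG scale-space."""
    sigmas = [sigma0 * k ** i for i in range(levels)]
    blurred = [gaussian_blur(signal, s) for s in sigmas]            # L(x, sigma)
    dog = [[hi - lo for lo, hi in zip(blurred[i], blurred[i + 1])]  # L(x, k*sigma) - L(x, sigma)
           for i in range(levels - 1)]
    keypoints = []
    for s in range(1, len(dog) - 1):            # need a DoG level above and below
        for x in range(1, len(signal) - 1):     # need a neighbor on each side
            v = dog[s][x]
            if abs(v) < min_contrast:           # reject low-contrast responses
                continue
            neighbors = [dog[s + ds][x + dx] for ds in (-1, 0, 1) for dx in (-1, 0, 1)
                         if (ds, dx) != (0, 0)]
            if v >= max(neighbors) or v <= min(neighbors):
                keypoints.append((x, sigmas[s], v))
    return keypoints

# Bright 1D "blob": a box of width 9 centered at index 20 on a dark background
signal = [100.0 if 16 <= i <= 24 else 0.0 for i in range(41)]
strongest = max(dog_keypoints(signal), key=lambda kp: abs(kp[2]))
print(strongest[0], strongest[1])  # position 20, plus the scale where the blob responds most
```

The strongest response sits at the center of the blob and is negative, as expected for a bright blob on a dark background.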

DoG invariance

- Scale invariance

- Rotation invariance (compute a canonical orientation for each keypoint, so that the descriptor is expressed in a local reference frame aligned with it rather than with the image axes)

SIFT Descriptor

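Scale Invariant Feature Transform → generates the descriptors used for matching. The patch around the keypoint (aligned to its canonical orientation) is divided into a 4x4 grid of subregions. Each subregion contributes an 8-bin histogram of gradient orientations, giving a 128-dimensional feature vector that summarizes the local gradient orientation patterns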
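Here is a simplified sketch of how such a descriptor can be assembled. It assumes a 16x16 patch that is already rotated to the canonical orientation and resampled at the keypoint's scale. It keeps the 4x4 grid, the 8 orientation bins and the normalize / clip at 0.2 / renormalize step, but omits SIFT's Gaussian weighting and trilinear interpolation. `sift_like_descriptor` is a hypothetical name, not a library API:

```python
import math

def _normalize(v):
    """Scale v to unit Euclidean length (left unchanged if it is all zeros)."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v] if norm > 0 else v

def sift_like_descriptor(patch):
    """Simplified SIFT-style descriptor of a 16x16 patch (list of 16 rows)."""
    n = 16
    hist = [0.0] * 128                                # 4x4 cells x 8 orientation bins
    for r in range(n):
        for c in range(n):
            # central differences, clamped at the patch border
            gx = patch[r][min(c + 1, n - 1)] - patch[r][max(c - 1, 0)]
            gy = patch[min(r + 1, n - 1)][c] - patch[max(r - 1, 0)][c]
            angle = math.atan2(gy, gx) % (2 * math.pi)
            b = int(angle / (2 * math.pi) * 8) % 8    # 45-degree orientation bin
            cell = (r // 4) * 4 + (c // 4)            # which of the 16 subregions
            hist[cell * 8 + b] += math.hypot(gx, gy)  # vote weighted by gradient magnitude
    clipped = [min(x, 0.2) for x in _normalize(hist)]  # damp very large gradients
    return _normalize(clipped)

# Horizontal intensity ramp: every gradient points along +x (orientation bin 0)
patch = [[10.0 * c for c in range(16)] for _ in range(16)]
desc = sift_like_descriptor(patch)
print(len(desc))  # 128
```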

Matching process

Finding the closest corresponding descriptor efficiently is a classic Nearest Neighbour (NN) search problem:

- the distance used is the Euclidean distance

- ratio test: compare the distance to the best match with the distance to the second-best match; a small ratio means a confident match, and thresholding the ratio eliminates about 90% of false matches

Indexing techniques are exploited for efficient NN search:

- k-d tree

- Best Bin First
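Here is a brute-force sketch of NN matching with the ratio test. In practice, a k-d tree or Best Bin First search would replace the linear scan. The threshold 0.8 is the value suggested by Lowe for SIFT. `match_descriptors` is a hypothetical helper, and `math.dist` needs Python 3.8+:

```python
import math

def match_descriptors(desc_a, desc_b, ratio=0.8):
    """Brute-force NN matching with the ratio test.

    For each descriptor in desc_a, find its nearest and second-nearest neighbors in
    desc_b (Euclidean distance) and accept the match only if d1 / d2 < ratio.
    Returns a list of (index_in_a, index_in_b) pairs.
    """
    matches = []
    for i, da in enumerate(desc_a):
        dists = sorted((math.dist(da, db), j) for j, db in enumerate(desc_b))
        if len(dists) < 2:
            continue
        (d1, j1), (d2, _) = dists[0], dists[1]
        if d2 > 0 and d1 / d2 < ratio:
            matches.append((i, j1))
    return matches

desc_a = [(0, 0), (5, 5)]
desc_b = [(0.1, 0), (10, 10), (5, 4), (5, 6)]
# (5, 5) is equally close to (5, 4) and (5, 6): ambiguous, so it is rejected
print(match_descriptors(desc_a, desc_b))  # [(0, 0)]
```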

5. Camera Calibration

To measure 3D information accurately from 2D images, we need to calibrate the camera. Camera calibration → determining a camera’s internal parameters (focal length, distortion) and external parameters (position, orientation)

Perspective projection

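A 3D point $M = (x, y, z)$ is projected into a 2D image point $m = (u, v)$ by the function:

$u = f\,\frac{x}{z}, \qquad v = f\,\frac{y}{z}$

Where:

- $f$ → focal length

- $z$ → depth (distance from the camera)

This projection is non-linear (division by $z$): objects appear smaller with distance, following all the rules of perspective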
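Here is a minimal sketch of the projection equations. It assumes the point is already expressed in the camera reference frame, with $z > 0$:

```python
def project(point, f):
    """Pinhole perspective projection of a camera-frame 3D point (x, y, z), z > 0."""
    x, y, z = point
    return (f * x / z, f * y / z)

# The same point twice as far away projects twice as close to the image center
print(project((1.0, 2.0, 4.0), f=2.0))  # (0.5, 1.0)
print(project((1.0, 2.0, 8.0), f=2.0))  # (0.25, 0.5)
```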

Projective Space

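We need to handle points at infinity. Projective space ($\mathbb{P}^3$) → each 3D point gets a 4th coordinate, $\tilde{M} = (x, y, z, w)$; $w = 0$ means the point is at infinity. This lets us express perspective projection linearly, using matrix multiplication:

$k\,\tilde{m} = P\,\tilde{M}$

Where:

- $\tilde{M}$: 3D point in homogeneous coordinates

- $\tilde{m}$: projected 2D image point in homogeneous coordinates (defined up to the scale factor $k$)

- $P$: Perspective Projection Matrix (PPM)

Canonical PPM (assuming $f = 1$):

$P = \begin{bmatrix} 1 & 0 & 0 & 0 \\ 0 & 1 & 0 & 0 \\ 0 & 0 & 1 & 0 \end{bmatrix} = [\, I \mid 0 \,]$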
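Here is the same projection written with homogeneous coordinates, as a sketch. The 3x4 matrix below is the pinhole PPM with focal length `f` (the canonical one for `f = 1`). Dividing by the third coordinate removes the scale factor $k$:

```python
def ppm(f):
    """PPM of the pinhole camera in its own reference frame; f = 1 gives [I | 0]."""
    return [[f, 0.0, 0.0, 0.0],
            [0.0, f, 0.0, 0.0],
            [0.0, 0.0, 1.0, 0.0]]

def project_homogeneous(P, M):
    """k * m = P @ M for homogeneous M = (x, y, z, w); divide by k = third coordinate."""
    m = [sum(P[r][c] * M[c] for c in range(4)) for r in range(3)]
    return (m[0] / m[2], m[1] / m[2])

print(project_homogeneous(ppm(2.0), (1.0, 2.0, 4.0, 1.0)))  # (0.5, 1.0), same as u = f x / z
# A point at infinity (w = 0) in direction (1, 2, 4) still has a finite image
print(project_homogeneous(ppm(2.0), (1.0, 2.0, 4.0, 0.0)))  # (0.5, 1.0)
```

The second call shows why the extra coordinate is useful. A point at infinity still has a well-defined image: the vanishing point of all lines with that direction.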

Image Digitization

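We convert continuous measurements on the image plane into discrete pixel coordinates, which requires the pixel size and the image origin. Steps:

- Scale by the pixel dimensions

- Shift by the image center, since pixel coordinates have their origin in the image corner (usually top-left)

Intrinsic Parameter Matrix → captures the internal characteristics of the camera:

$A = \begin{bmatrix} f_u & s & u_0 \\ 0 & f_v & v_0 \\ 0 & 0 & 1 \end{bmatrix}$

Where:

- $f_u$ → focal length in horizontal pixels

- $f_v$ → focal length in vertical pixels

- $s$ → skew (typically 0 for most modern cameras)

- $(u_0, v_0)$ → image center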
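Here is a sketch of how the intrinsic matrix maps a camera-frame point to pixels. The numbers ($f_u = f_v = 800$ px, no skew, image center at (320, 240)) are hypothetical:

```python
def to_pixels(A, x, y, z):
    """Pixel coordinates of a camera-frame point: (u, v, 1) ~ A @ (x/z, y/z, 1)."""
    p = (x / z, y / z, 1.0)                          # canonical projection (f = 1)
    m = [sum(A[r][c] * p[c] for c in range(3)) for r in range(3)]
    return m[0] / m[2], m[1] / m[2]

A = [[800.0, 0.0, 320.0],    # fu, s, u0
     [0.0, 800.0, 240.0],    # 0, fv, v0
     [0.0, 0.0, 1.0]]
u, v = to_pixels(A, 0.1, -0.05, 2.0)
print(u, v)  # about 360 and 220
```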

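Extrinsic parameters → the camera reference frame (CRF) is related to the world reference frame (WRF) by a rotation matrix $R$ and a translation vector $t$: $M_{CRF} = R\,M_{WRF} + t$. Combined with the intrinsic parameter matrix $A$, this gives the full PPM $P = A\,[\,R \mid t\,]$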
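Here is a sketch of the change of reference frame with a hypothetical pose: a 90-degree rotation about the $z$ axis, with the world origin 5 units in front of the camera:

```python
import math

def world_to_camera(R, t, Mw):
    """Rigid change of reference frame: M_CRF = R @ M_WRF + t."""
    return [sum(R[i][j] * Mw[j] for j in range(3)) + t[i] for i in range(3)]

a = math.pi / 2
R = [[math.cos(a), -math.sin(a), 0.0],
     [math.sin(a), math.cos(a), 0.0],
     [0.0, 0.0, 1.0]]
t = [0.0, 0.0, 5.0]
print(world_to_camera(R, t, [1.0, 0.0, 0.0]))  # close to [0, 1, 5] (up to rounding)
print(world_to_camera(R, t, [0.0, 0.0, 0.0]))  # [0.0, 0.0, 5.0]: the WRF origin maps to t
```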

Homography

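If the scene is flat, its projection simplifies to a homography. Choosing the WRF so that the plane is $z = 0$:

$k\,\tilde{m} = H\,\tilde{w}$

Where:

- $H$ → 3x3 matrix representing the homography

- $\tilde{w} = (x, y, 1)$ → 2D coordinates in the plane + 1 in homogeneous coordinates (a triple)

The homography simplifies calibration and still holds information about the intrinsic parameters: $H = A\,[\,r_1 \;\, r_2 \;\, t\,]$, where $A$ is the intrinsic matrix, $r_1, r_2$ are the first two columns of the rotation matrix and $t$ is the translation vector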
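Applying a homography takes one matrix product plus the division by the homogeneous coordinate. The matrix below is a hypothetical example with a non-trivial last row:

```python
def apply_homography(H, x, y):
    """Map plane point (x, y) through the 3x3 homography H: k * (u, v, 1) = H @ (x, y, 1)."""
    w = (x, y, 1.0)
    m = [sum(H[r][c] * w[c] for c in range(3)) for r in range(3)]
    return m[0] / m[2], m[1] / m[2]

H = [[1.0, 0.0, 0.0],
     [0.0, 1.0, 0.0],
     [0.5, 0.0, 1.0]]   # last row != (0, 0, 1): a genuinely projective (perspective) mapping
print(apply_homography(H, 2.0, 4.0))  # (1.0, 2.0)
```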

Lens distortion

- Barrel → outward bending

- Pincushion → inward bending

Lens distortion is non-linear, so we need to model it with non-linear functions to correct the image
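One common way to model this is a polynomial radial model on normalized coordinates, $x_d = x\,(1 + k_1 r^2 + k_2 r^4)$. The model, its coefficients and the fixed-point inversion below are assumptions for illustration, not taken from the notes. Real calibration tools may include more terms, e.g., tangential distortion:

```python
def distort(x, y, k1, k2):
    """Assumed radial model on normalized coordinates: (x, y) * (1 + k1 r^2 + k2 r^4)."""
    r2 = x * x + y * y
    factor = 1 + k1 * r2 + k2 * r2 * r2
    return x * factor, y * factor

def undistort(xd, yd, k1, k2, iterations=20):
    """Correct a distorted point by fixed-point iteration: (x, y) = (xd, yd) / factor(x, y).
    The model has no simple closed-form inverse; this converges for moderate distortion."""
    x, y = xd, yd
    for _ in range(iterations):
        r2 = x * x + y * y
        factor = 1 + k1 * r2 + k2 * r2 * r2
        x, y = xd / factor, yd / factor
    return x, y

xd, yd = distort(0.3, 0.2, k1=-0.2, k2=0.05)  # k1 < 0: barrel (points pulled towards the center)
print(xd, yd)
print(undistort(xd, yd, k1=-0.2, k2=0.05))   # approximately (0.3, 0.2)
```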

What is calibration

Calibration estimates the intrinsic parameters, the extrinsic parameters and the lens distortion coefficients:

- Capture multiple images of a known pattern (e.g., a chessboard)

- Find 2D-3D correspondences (image corner ↔ real-world corner)

- Solve the projection equations for the parameters

Zhang’s method

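A practical way to calibrate a real camera using images of a flat pattern:

- Use a flat target (all pattern points have $z = 0$)

- For each image, form the 2D-3D pairs of corners and estimate a homography

- Each homography relates image coordinates to pattern coordinates; at least 4 points per image are needed to compute it (8 degrees of freedom, 2 equations per point)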
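Here is a minimal sketch of the per-image homography estimation step. It assumes exactly 4 correspondences and $h_{33} = 1$, so it fails if the true $h_{33}$ is 0. In practice, many more chessboard corners are used in a least-squares fit, followed by non-linear refinement. The helper names are hypothetical:

```python
def solve_linear(M, b):
    """Solve the square system M x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    aug = [list(row) + [rhs] for row, rhs in zip(M, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(col + 1, n):
            factor = aug[r][col] / aug[col][col]
            for c in range(col, n + 1):
                aug[r][c] -= factor * aug[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):               # back substitution
        x[r] = (aug[r][n] - sum(aug[r][c] * x[c] for c in range(r + 1, n))) / aug[r][r]
    return x

def homography_from_4_points(src, dst):
    """Estimate H (h33 fixed to 1) from 4 correspondences (x, y) -> (u, v):
    each point gives 2 linear equations, so 4 points give the 8 needed."""
    rows, rhs = [], []
    for (x, y), (u, v) in zip(src, dst):
        rows.append([x, y, 1.0, 0.0, 0.0, 0.0, -u * x, -u * y])
        rhs.append(u)
        rows.append([0.0, 0.0, 0.0, x, y, 1.0, -v * x, -v * y])
        rhs.append(v)
    h = solve_linear(rows, rhs)
    return [h[0:3], h[3:6], h[6:8] + [1.0]]

def apply_homography(H, x, y):
    """Map (x, y) through H and divide by the homogeneous coordinate."""
    m = [H[r][0] * x + H[r][1] * y + H[r][2] for r in range(3)]
    return m[0] / m[2], m[1] / m[2]

# Synthetic check: corners of a unit square on the pattern mapped by a known H
H_true = [[2.0, 0.1, 5.0], [0.2, 1.5, -3.0], [0.1, 0.2, 1.0]]
pattern = [(0.0, 0.0), (1.0, 0.0), (1.0, 1.0), (0.0, 1.0)]
image = [apply_homography(H_true, x, y) for x, y in pattern]
H_est = homography_from_4_points(pattern, image)
print(H_est)  # approximately equal to H_true
```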

Summary

| Concept | What It Represents |
|---|---|
| Intrinsic matrix $A$ | Focal lengths, image center, skew |
| Camera pose (extrinsics) $[R \mid t]$ | Rotation and translation from the WRF to the CRF |
| Homography $H$ | 2D projective mapping for planar scenes |
| Distortion | Lens imperfections modeled with parameters |
| Zhang’s Method | Practical way to calibrate a real camera using images of a flat pattern |